I haven't forgotten this blog - I've been working on a new IP paper over the past few months, so I haven't had the energy to post anything else IP-related.

My paper, which is about half done, outlines the problems IP Managers have in getting a business-focused IP strategy done within the bureaucracy of the legal world they work in. I have come up with a new model that will hopefully address these issues.

Thursday, February 15, 2007

The Balance of Intellectual Property, Part 1

Posted by Peter Cowan, P.Eng, MBA at 10:39 PM | 3 comments

Friday, October 13, 2006

The Social Patent Process

There is a considerable amount we, as IP Managers or Attorneys, can learn about the patent process from the Early Adopter internet geeks in this world.

Last month I read about Social Networking still being an Early Adopter technology. Upon reflection, I think there is a valuable patent process based idea we can take away from this.

One of the larger (useful) social networking information sites is digg.com – a social networking site that combines social bookmarking, blogging and syndication – but all in a democratic environment. Users can submit stories and “digg” other users' stories. Through a real-time, user-based ranking system, if a story gathers enough “diggs”, depending on the algorithm that runs in the background, it makes it to the front page. There are specific categories (Technology, Science, World & Business, Sports, Entertainment, Gaming, and Videos), each with sub-categories. Listed in the top 100 most visited sites on the internet by Alexa, it claims over 500,000 registered users and 3000+ submitted stories per day. A story typically needs 350-600 “diggs” to get to the front page, the range dependent on the time of day and week the story is posted.

Now you may be wondering, how does this relate to an IP process? In short: idea screening and ranking. Many companies have ranking committees that filter the good ideas from the bad. Often several different departments are included, perhaps along with IP counsel and an IP Manager. For mid to large firms that have a large number of innovations submitted for patenting, the ranking process is controlled by the few on the ranking committee, which means these few have to sort and filter a large number of ideas. But is this process skewed?

Recently Bill Mead of Basic IP wrote an article entitled “The 6 Stages of Inventors” which sums up the state of most current processes. Mead notes several social-norm-based problems with this ranking process. He writes “Many patent committees will not select patent applications based only on merit. A variety of other factors can crowd merit off the table. For example, "This is this inventor's first disclosure, we need to file on it to encourage them." Or, "We are not getting enough disclosures in this area of technology, we need to file on this disclosure because we need more coverage here." Or, my personal favorite non-merit-based reason "Might be useful." When taken with the "This person has more than his/her fair share of patent applications this meeting" norm that inventor mentors have inflicted on them, these seemingly innocuous norms can become an oppression to your company's best and brightest inventors, your inventor mentors.”

In considering patent idea ranking, both from an employee effort perspective and an idea merit perspective, there is room for improvement. I suggest that one way to move to a more democratic and meritocratic process may be to insert the social networking backbone of sites like digg.com into the searching or idea ranking process. Such a system could have the following structure and benefits:

- Ideas get 1 vote per [qualified] employee (anyone is free to rank, but that may cause more issues than having a larger set of qualified rankers). The goal here is to get people involved and build innovation (and a sense of what makes a good or bad idea) into the culture.

- Ideas are submitted by anyone, but all inventors (theoretically the entire R&D department and beyond) are encouraged to use this innovation database as a pool of ideas to build on, or to see what others are working on. The goal here is to expand the idea database into a true working innovation/brainstorming database. The end result is to promote innovation within development as a culture, and to give anyone the opportunity to build on ideas.

- Ideas are commented on or added to by anyone. The goal is to spread the ownership of the idea to more people across the organization, which would in turn build a cycle of buy-in for the new ranking system.

- Inventors get relatively instant feedback on whether the idea is valuable or needs work. The goal is to speed up the feedback cycle and make it more valuable, which will result in increased motivation to file ideas, and better ideas submitted over the long term.
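The structure in the list above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `IdeaBoard` class, its method names, and the employee and idea names are all hypothetical, and the "qualified voter" rule is reduced to simple set membership.

```python
from collections import defaultdict


class IdeaBoard:
    """Toy idea-ranking board: one vote per qualified employee per idea."""

    def __init__(self, qualified_voters):
        self.qualified = set(qualified_voters)
        self.votes = defaultdict(set)      # idea title -> set of voters
        self.comments = defaultdict(list)  # idea title -> [(author, text)]

    def submit(self, title):
        # Anyone may submit; touching the key registers the idea with 0 votes.
        self.votes[title]

    def vote(self, title, employee):
        # Returns True only if the vote was counted.
        if employee not in self.qualified or title not in self.votes:
            return False
        if employee in self.votes[title]:
            return False  # this employee already voted on this idea
        self.votes[title].add(employee)
        return True

    def comment(self, title, employee, text):
        # Anyone may comment on or add to an idea.
        self.comments[title].append((employee, text))

    def ranking(self):
        # Most votes first; ties broken alphabetically so output is stable.
        pairs = [(title, len(voters)) for title, voters in self.votes.items()]
        return sorted(pairs, key=lambda p: (-p[1], p[0]))


board = IdeaBoard(qualified_voters={"ana", "raj", "lee"})
board.submit("hinge lock")
board.submit("sensor mount")
board.vote("sensor mount", "ana")
board.vote("sensor mount", "raj")
board.vote("sensor mount", "raj")   # duplicate vote, ignored
board.vote("hinge lock", "lee")
board.vote("hinge lock", "guest")   # not a qualified voter, ignored
board.comment("hinge lock", "guest", "Could this reuse the existing latch?")
print(board.ranking())  # [('sensor mount', 2), ('hinge lock', 1)]
```

A ranking committee could start its meeting from the output of `ranking()` and then apply its own legal and business filters on top.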

Over time these characteristics would develop an idea ranking process that is used by two distinct employee groups - the ranking committee and the inventors.

- Ranking Committee: The ranking committee could meet already having a rough outline of the ranked ideas to move forward. While most employees don’t have the perspective of the ranking committee – a combination of legal, business and technology reasons to pick the ideas they do – it will give a base to start from (thereby reducing work), ultimately fostering a process where ideas are ranked mostly on merit. On the flip side, having everyone see what was highly ranked and what was eventually patented gives feedback and training to everyone at a more global level: ‘what was actually important, and why.’

- Inventors: The employees can use the idea/ranking database as an incubation center in the product development process. Integrating this tool into the actual R&D process automatically begins to ingrain the culture of IP into the employees. Development is done and, without any extra effort, the ideas are already populated into a database for ranking and filing.

I could see this being more valuable the larger the organization, which makes sense because smaller organizations are more nimble and ranking should be easier to begin with. It could also be very valuable in organizations that have R&D spread across multiple locations. As I noted earlier, the best residual effect of this improved process would be fostering collaboration and inserting IP right into the fabric of the development culture.

Any comments on if / how this can work out?

Posted by Peter Cowan, P.Eng, MBA at 8:38 PM | 2 comments

Labels: business, process, strategy

Thursday, September 21, 2006

Strategic Patenting Decisions and their Influence on Patent Value

As promised, here is part III – Which Patent Variables are indicators of Patent Value?

Again I ask, why is this important for the practicing attorney? As one who prosecutes patents and gives advice to clients on which applications to pursue and which to abandon, this will give scientific weight to your advice.

In doing so I will answer the research question: For firms, which early strategic patenting decisions around the patent itself will impact the future value of the patent?

In my previous posts I looked at the 6 independent variables, all of them measured against the dependent variable of Forward Citations, which was used as a proxy for Patent Value.

The Statistical Analysis:

I performed a hierarchical regression analysis to test the hypothesis, using the enter method for the control variables and the stepwise method for the independent variables. The analysis was split across large and small firms to enable a comparison as well as to allow for detailed analysis of small firm patent characteristics. Controlling for Firm Experience and Industry, the regression used the predictors Family Size, Breadth, Claim Count, Jurisdiction Count, Priority Basis and Provisional Basis.
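For readers unfamiliar with the idea, here is a minimal sketch of a hierarchical regression: fit the control variables first, then add the predictors, and look at how much R² improves. It is not the SPSS analysis described above. It uses plain least squares on synthetic data generated inside the script, does not implement stepwise selection, and the function name `ols_r2` and all variable names are assumptions made for illustration.

```python
import random


def ols_r2(X, y):
    """Fit y = b0 + X.b by ordinary least squares and return R squared."""
    rows = [[1.0] + list(r) for r in X]          # prepend intercept column
    k = len(rows[0])
    # Normal equations (A'A) b = A'y, stored as an augmented matrix.
    M = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
         + [sum(r[i] * yi for r, yi in zip(rows, y))]
         for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for c in range(k):
        pivot = max(range(c, k), key=lambda idx: abs(M[idx][c]))
        M[c], M[pivot] = M[pivot], M[c]
        for r in range(k):
            if r != c:
                f = M[r][c] / M[c][c]
                M[r] = [a - f * b for a, b in zip(M[r], M[c])]
    beta = [M[i][k] / M[i][i] for i in range(k)]
    fitted = [sum(b * v for b, v in zip(beta, r)) for r in rows]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


# Synthetic stand-in data only; these are NOT the patent records from the study.
random.seed(0)
n = 200
controls = [[random.gauss(0, 1), random.choice([0, 1])] for _ in range(n)]
predictors = [[random.gauss(0, 1), random.gauss(0, 1)] for _ in range(n)]
y = [0.5 * c[1] + 0.8 * p[0] + random.gauss(0, 1)
     for c, p in zip(controls, predictors)]

r2_controls = ols_r2(controls, y)                      # step 1: controls only
r2_full = ols_r2([c + p for c, p in zip(controls, predictors)], y)  # step 2
print(f"controls only:         R2 = {r2_controls:.3f}")
print(f"controls + predictors: R2 = {r2_full:.3f}")
print(f"change in R2:          {r2_full - r2_controls:.3f}")
```

The change in R² between the two steps is the share of variance the predictors explain beyond the controls, which is the quantity a hierarchical design is built to isolate.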

The Results:

The results show support for the hypothesis for both small and large firms at the p < 0.05 level. For small firms the model was significant at the p < 0.05 level for the variables Industry Type, Priority Claim and Breadth of Patent, whereas for large firms it was significant at the p < 0.05 level for Industry Type and Number of Claims. The R² was 32% for the small entity, but only 9% for the large entity. This empirical result supports the research question in that there are internal predictors associated with the value of the patent. Note, however, that although significant predictors were found for both small and large firms, it is mainly the predictive results for small firms that should be considered for inclusion in business practices by industry.

Take Away Points:

How can I apply this to my firm? Small firms can benefit from this research by seeking to increase the value of their future patent portfolio by filing new patents that do not claim priority to other applications, yet cover a broad scope of technology.

How should I change my filing strategy? Don't just churn them out. Simply having a patenting strategy that is focused on creating large patent portfolio counts, or having a standard procedure to patent all innovations across a pre-defined batch of jurisdictions, will not necessarily lead to a portfolio with a high volume of valuable patents. Firms must consider each new innovation separately and make filing decisions accordingly, not based on pre-determined business decision procedures.

Can small firms really make a difference by patenting things? Does their lack of patenting experience matter? Although small firms have less patenting experience than large firms, as seen in their experience distribution plots, surprisingly the firm's patenting experience was not a significant predictor in the model. This suggests to industry that small firms with little or no patenting experience still have the potential to create valuable patent portfolios from inception.

Posted by Peter Cowan, P.Eng, MBA at 8:38 PM | 2 comments

Labels: research, thesis

Saturday, July 29, 2006

Patent value & court decisions

A recent bump in traffic has come from someone posting about my citation/value research on Silicon Investor.

One reply to the thread said this: "interesting blog... It is, and goes back to the Glimstedt study of citations, but I'd be happier if he were listing court decisions based on the citation method of valuation."

Actually the citation method is tied to court decisions; it was just not the focus of this particular research paper. I am only posting a brief summary of the full paper here, hence you didn't see the link back to the references which tie litigated patents (which I will loosely interchange with court decisions here) to citations. For your reference, here's the portion of my research that ties them together:

It has been suggested that, given the high cost of litigation, patents which are litigated are also typically considered valuable patents. Research has correlated litigated patents with high patent citations (Lanjouw and Schankerman 1997; Allison, Lemley et al. 2004), with Allison arguing strongly for the bi-directional relationship between litigated patents and value. Their argument is based on the premise of the high relative cost of litigation compared to the cost of merely obtaining the patent: enforcing a patent through litigation costs almost 75x as much as filing it.

An interesting next step in the research would be to dive more deeply into the litigated patents and pull out the citation counts, ultimately seeing if there is a model that can be developed for monetary settlements and citations.

References above:

Lanjouw, J. O. and M. Schankerman (1997). "Stylized facts of patent litigation: value, scope, ownership." NBER Working Paper (No. 6297).

Allison, J. R., M. A. Lemley, et al. (2004). "Valuable Patents." Georgetown Law Journal 92(3): 435.

Posted by Peter Cowan, P.Eng, MBA at 8:37 PM | 1 comment

Labels: academia, research, thesis

Saturday, July 8, 2006

Patent data: foreshadowing of value to come

As promised, here is part II – Patent Variables to measure internal patent value.

Why is this important for the practicing attorney? As one who prosecutes patents and gives advice to clients on which applications to pursue and which to abandon, this will give scientific weight to your advice.

First I will give a short background on how I “measured value” objectively for the patents in my data set.

Dependent Variable, or “measured value”: Forward Citation Counts

The dependent variable, forward citations, is measured by the number of citations the granted patent has from other patents. The variable was operationalized by counting the frequency of citations that the particular patent receives from subsequent patents as filed in the USPTO. I also controlled for self-citing.
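As a rough illustration of this operationalization, the sketch below counts forward citations from a list of (citing, cited) pairs and skips self-citations. Treating a citation as a self-citation when both patents share an assignee is an assumption made here, since the post does not say how self-citing was controlled for. The function name, patent IDs and assignee names are all hypothetical.

```python
from collections import Counter


def forward_citation_counts(citations, assignee_of):
    """Count citations each patent receives, excluding self-citations.

    citations:   iterable of (citing_patent, cited_patent) pairs
    assignee_of: dict mapping patent id -> assignee name
    A pair counts as a self-citation when both patents have the same known
    assignee (an assumption made for this sketch).
    """
    counts = Counter()
    for citing, cited in citations:
        owner = assignee_of.get(citing)
        if owner is not None and owner == assignee_of.get(cited):
            continue  # same assignee on both sides: skip the self-citation
        counts[cited] += 1
    return counts


# Made-up example records, not data from the study.
citations = [("P2", "P1"), ("P3", "P1"), ("P4", "P1"), ("P4", "P2")]
assignee_of = {"P1": "Acme", "P2": "Acme", "P3": "Bolt", "P4": "Core"}

counts = forward_citation_counts(citations, assignee_of)
print(counts["P1"], counts["P2"])  # 2 1
```

Here P1 is cited three times in the raw data, but the citation from P2 comes from the same assignee and is dropped, leaving a forward citation count of 2.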

Is this valid? I propose it is. Starting as early as 1990, forward citations have been validated by other research, showing that a general conceptual definition of patent value or quality can be linked to the number of citations and external references a patent receives. The reasoning for using citations as a measure of value is the same as for scientific literature: the economic importance of a work should be correlated with the frequency with which it is cited as a benchmark for further developments. It has also been suggested that, given the high cost of litigation, patents which are litigated are typically considered valuable patents, and research has correlated litigated patents with high patent citations.

Independent Variables: Strategic Patenting Decisions

I am suggesting that each of these variables has an influence on the final value of a patent.

Family Size. Family size is the number of all related applications (continuations, continuations-in-part and divisionals) filed worldwide.

Breadth. Patent breadth or scope is a measure of the technological influence or boundaries that the patent encompasses.

Claim Count. Claims define the legal boundaries of the patent rights, thus the firm typically has an incentive to claim as much and as widely as possible, while the examiner may narrow or reduce the claims to ensure validity before granting.

Jurisdiction. Jurisdiction size is computed as the number of separate country jurisdictions in which patent protection was sought for the same application.

Provisional Basis. Provisional applications allow an inventor to file information relating to their invention and claim priority to that filing date for up to 1 year from the original provisional filing date.

Priority Claim. Priority claim indicates whether the patent application was based on a previous application, such as a continuation or divisional.

If you wish to see how I operationalized any of these variables let me know and I will post some additional details.

Next up: Part III – Results. How did each of these independent variables fare when put to the statistical tests? And, more to the point, how can we apply this from a patent filing strategy perspective?

Posted by Peter Cowan, P.Eng, MBA at 8:37 PM | 1 comment

Labels: research, thesis

Thursday, April 27, 2006

New way to look at value of patents?

First off - let me apologize for the lengthy delays between postings. I was hoping for at least 2 a week when I started this site but other non-work obligations mean this site will fall to the bottom of my pile for at least another few months. I will keep you posted...

In the meantime, here is something to ponder:

The Institute of Physics put out a news article entitled "Google unearths physics gems". It outlines how the Google PageRank algorithm is being used to find, and in some cases uncover, important scientific papers. On a personal note, I used Google Scholar extensively when doing research for my thesis last year and found it at least as valuable as, if not more valuable than, some of the traditional search tools such as EBSCOhost et al.

My question for the post is this: How can Google's PageRank algorithm be used in patent searching and ranking, specifically to help do accurate prior art searches? How can it be used to help determine the value of a filed or granted patent?
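To make the question concrete, here is a minimal sketch of the textbook PageRank iteration applied to a tiny, made-up patent citation graph, where a citation plays the role of a hyperlink. This is the basic published form of the algorithm, not Google's production system, and the patent IDs, damping factor and iteration count are assumptions chosen for illustration.

```python
def pagerank(cites, damping=0.85, iterations=50):
    """Rank patents by the citations they receive.

    cites maps each patent id to the list of earlier patents it cites.
    Returns a dict of patent id -> score; the scores sum to 1.
    """
    nodes = set(cites)
    for targets in cites.values():
        nodes.update(targets)
    n = len(nodes)
    rank = {p: 1.0 / n for p in nodes}
    for _ in range(iterations):
        new = {p: (1.0 - damping) / n for p in nodes}
        for p in nodes:
            targets = cites.get(p, [])
            if targets:
                # Split this patent's score among the patents it cites.
                share = damping * rank[p] / len(targets)
                for t in targets:
                    new[t] += share
            else:
                # A patent that cites nothing spreads its score evenly.
                for q in nodes:
                    new[q] += damping * rank[p] / n
        rank = new
    return rank


# Hypothetical citation graph: P1 is the oldest and most-cited patent.
cites = {"P4": ["P1", "P2"], "P3": ["P1"], "P2": ["P1"], "P1": []}
scores = pagerank(cites)
for patent in sorted(scores, key=scores.get, reverse=True):
    print(patent, round(scores[patent], 3))
```

Unlike a raw forward citation count, this weighting gives more credit to a citation that comes from a patent which is itself heavily cited.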

I think that is something worth postulating... Perhaps for my next thesis. Any thoughts or ideas would be interesting to hear...

Posted by Peter Cowan, P.Eng, MBA at 8:36 PM | 0 comments

Labels: business, research

Saturday, February 18, 2006

Patenting differences between large & small firms

My research interests lie in the patenting differences between large & small firms – and more importantly, how a small firm with relatively fewer resources can get “more for their money” by having more valuable patents.

Those in the patent field realize that small firms have less experience than large firms, as measured by filed applications – but is the difference large enough to compare the two groups statistically? We must determine that before moving forward.

Data Source: The analysis used patent data collected for US patents granted in January 1999[1] within the International Patent Classification (IPC) classes related to Electrical Devices (H01) and Mechanical Devices (F16). These particular classes were chosen because they are relatively opposite technical categories, allowing for a clear comparison across industries. A total of 847 samples were drawn from the USPTO, with both expired patents and those granted to non-US based firms removed from the sample. The remaining 386 samples were coded into Small and Large Entity status, as listed in each patent application record available from the USPTO.

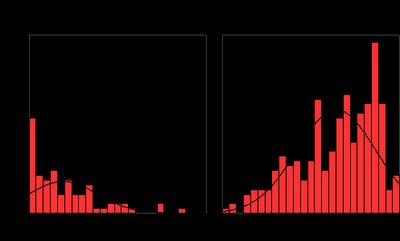

Small and large firms patenting experience distribution, as measured by filed applications.

With negative skewness (-0.58) and kurtosis (-0.457) for the large firms and positive skewness (1.25) and kurtosis (1.452) for the small firms, it can be seen that small firms have less experience than large firms, as measured by filed applications.
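For anyone who wants to check figures like these outside of a statistics package, the sketch below computes sample skewness and excess kurtosis with the bias-adjusted formulas that statistics packages commonly report. The function name and the example numbers are made up for illustration and are not the filing counts from the data set.

```python
from math import sqrt


def sample_skew_kurtosis(values):
    """Return (skewness, excess kurtosis) using bias-adjusted sample formulas."""
    n = len(values)
    if n < 4:
        raise ValueError("need at least 4 observations")
    mean = sum(values) / n
    s = sqrt(sum((x - mean) ** 2 for x in values) / (n - 1))  # sample std dev
    if s == 0:
        raise ValueError("all observations are identical")
    z3 = sum(((x - mean) / s) ** 3 for x in values)
    z4 = sum(((x - mean) / s) ** 4 for x in values)
    skew = n / ((n - 1) * (n - 2)) * z3
    kurt = (n * (n + 1) / ((n - 1) * (n - 2) * (n - 3)) * z4
            - 3 * (n - 1) ** 2 / ((n - 2) * (n - 3)))
    return skew, kurt


# Made-up filing counts: most firms file little, one files a lot (right tail).
few_filers = [1, 1, 1, 2, 2, 3, 10]
skew, kurt = sample_skew_kurtosis(few_filers)
print(f"skewness = {skew:.2f}, kurtosis = {kurt:.2f}")
```

A positive skewness means the bulk of firms sit at the low end of the scale with a long tail of heavier filers, which is the pattern reported above for small firms.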

(Sorry the background is black, but SPSS was producing weird output that I couldn't fix when importing into Blogger - you need to click and open it to see the graph.)

So, with this difference in experience established statistically, we can move on to comparing the patents of small and large firms.

Next: Part II – Patent Variables to measure internal patent value. I will be using family size, patent breadth, patent jurisdiction size, number of claims, provisional basis and priority claims as my variables of value.

[1] A data set from before November 29, 2000 was consciously chosen, as US law now requires all US patent applications filed on or after this date to be published 18 months after the earliest filing date for which a benefit is sought, unless the application will not be filed in a foreign country that provides for 18-month publication. Data collected after this date would cause issues, as there would effectively be 2 data sets to consider: citations for granted applications that had an earlier publication date and citations for granted applications that were not published.

Posted by Peter Cowan, P.Eng, MBA at 8:35 PM | 0 comments

Labels: research, thesis